A/B testing replaces guesswork in marketing with evidence. Instead of debating which version of a page, email, or ad will perform better, you show both to real audiences and let the data decide.

In this guide, we’ll explore the ins and outs of A/B testing, from setting up tests to analyzing results and applying best practices.

Introduction to A/B Testing

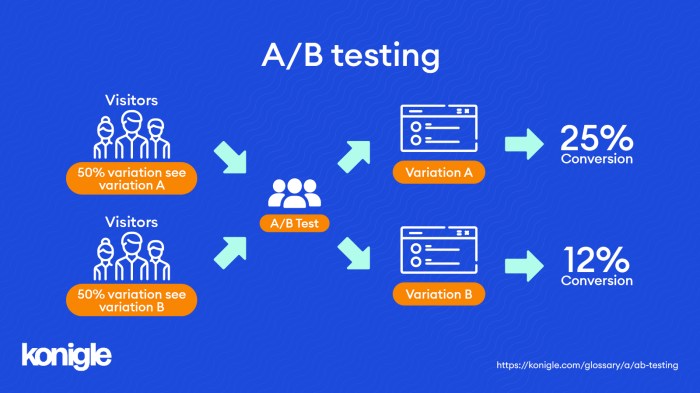

A/B testing, also known as split testing, is an experimentation method that compares two versions of a webpage, email, or other marketing asset by showing each one to a randomly chosen share of the audience and measuring which performs better. This method helps marketers make data-driven decisions to optimize their campaigns and improve overall performance.

The Purpose of A/B Testing in Marketing

A/B testing is crucial in marketing campaigns as it allows marketers to identify the most effective elements that resonate with their target audience. By testing different variations of content, design, or calls-to-action, marketers can determine what drives higher engagement, click-through rates, and conversions. This data-driven approach helps in making informed decisions to enhance marketing strategies and achieve better results.

- For example, an e-commerce company can use A/B testing to analyze two different product page layouts to see which one leads to more purchases.

- A software company can test two variations of a promotional email to determine which subject line generates higher open rates.

- A social media platform can experiment with different ad creatives to discover which one drives more clicks and conversions.

Setting Up A/B Tests

When setting up an A/B test, there are several key steps to follow to ensure accurate results and meaningful insights. It’s crucial to approach the process methodically and strategically to get the most out of your testing efforts.

Selecting the Right Variables to Test

- Identify clear objectives: Before setting up an A/B test, define what you want to achieve and what specific metrics you are looking to improve.

- Choose relevant variables: Select variables that directly impact the outcome you are testing. Focus on elements that are likely to have a significant impact on user behavior or engagement.

- Avoid testing multiple variables at once: To accurately measure the impact of changes, it’s important to isolate variables and test them individually. Testing multiple variables simultaneously can lead to ambiguous results.

- Consider the potential impact of the test: Ensure that the variables you choose to test are meaningful and have the potential to drive substantial improvements in your marketing efforts.

Creating a Controlled Environment

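- Use random assignment: Randomly assign participants to the variations so that the groups are comparable and any difference in outcome can be attributed to the change being tested. Randomization reduces bias; it does not by itself make a result statistically significant.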

- Set a clear timeframe: Define the test duration before you start, and run both variations over the same period so that seasonal or day-of-week effects touch each group equally.

- Avoid external interference: Minimize external factors that could impact the test results, such as changes in market conditions or unexpected events.

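- Monitor the test closely: Regularly check that traffic is being split as planned and that tracking works. Avoid changing the variations or the traffic split while the test is running, since mid-test changes make the results hard to interpret.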
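One common way to implement random assignment is to hash a user identifier, so that each user lands in the same variation on every visit. The Python sketch below illustrates the idea under stated assumptions: the function name `assign_variant`, the experiment name, and the user ID format are hypothetical, and a real testing tool may handle assignment differently.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to one variant with roughly equal odds.

    Hashing the experiment name together with the user ID means the same
    user always sees the same variant within one experiment, while separate
    experiments split the audience independently of each other.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# Hypothetical usage: the user ID and experiment name are made up.
print(assign_variant("user-1042", "product-page-layout"))
```

Because the assignment depends only on the inputs, it needs no stored state, and calling the function again for the same user returns the same variation.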

Analyzing A/B Test Results

When it comes to analyzing A/B test results, it’s crucial to understand how to interpret the data effectively to make informed marketing decisions. By identifying statistically significant outcomes and evaluating the implications of different results, marketers can optimize their strategies for better performance.

Interpreting A/B Test Results

- Compare Conversion Rates: Look at the conversion rates of the control group (A) and the variant group (B) to see which one performed better.

- Analyze Statistical Significance: Use statistical tools like p-values and confidence intervals to determine if the results are statistically significant.

- Consider Sample Size: Ensure that the sample size is large enough to draw meaningful conclusions from the data.
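As a minimal sketch of the comparison described above, the following Python computes each group's conversion rate and the relative lift of B over A. The function names are hypothetical and the visitor and conversion counts are made-up illustration values, not real campaign data.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who converted."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Relative change of the variant over the control (0.25 means +25%)."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variant_rate - control_rate) / control_rate


# Hypothetical counts, for illustration only.
rate_a = conversion_rate(200, 5000)  # control (A)
rate_b = conversion_rate(250, 5000)  # variant (B)
lift = relative_lift(rate_a, rate_b)
print(f"A: {rate_a:.2%}  B: {rate_b:.2%}  lift: {lift:.1%}")
```

A higher rate on its own does not settle the question: the difference still has to be checked for statistical significance before acting on it.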

Identifying Statistically Significant Outcomes

- Statistical Tools: Utilize A/B testing tools or statistical software to calculate significance levels and confidence intervals.

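- Confidence Intervals: Look at the confidence interval for the difference between the control and variant conversion rates. If that interval excludes zero, the data point to a real difference. Comparing the two groups’ separate intervals for overlap is a rougher check and can miss differences that are in fact significant.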

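- P-values: Look for a p-value below a threshold chosen before the test (commonly 0.05). A p-value below the threshold means a difference this large would be unlikely if the two versions truly performed the same.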
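The p-value and confidence interval mentioned above can be computed with a two-proportion z-test. The sketch below uses only the Python standard library and the usual normal approximation, which assumes reasonably large samples. The function name and the example counts are hypothetical, and dedicated A/B testing tools may use other methods.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Two-sided z-test for the difference between two conversion rates.

    Returns (z, p_value, (ci_low, ci_high)), where the confidence interval
    is for the difference rate_b - rate_a.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    diff = p_b - p_a

    # Pooled standard error: used for the test, which assumes no difference.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se_pool = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se_pool == 0:
        raise ValueError("cannot test when nobody or everybody converted")
    z = diff / se_pool
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))

    # Unpooled standard error: used for the interval around the difference.
    se_diff = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return z, p_value, (diff - z_crit * se_diff, diff + z_crit * se_diff)


# Hypothetical counts, for illustration only.
z, p_value, (low, high) = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p_value:.4f}, 95% CI for B - A: [{low:.4f}, {high:.4f}]")
```

With these illustrative counts the p-value falls below 0.05 and the interval for the difference excludes zero, so both checks agree that B outperformed A.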

Implications for Marketing Strategies

- Positive Outcome: If the variant group performs significantly better, consider implementing the changes across your marketing campaigns for improved results.

- Negative Outcome: If the control group outperforms the variant, reassess your changes and test alternative strategies to achieve better outcomes.

- No Significant Difference: If there is no clear winner between A and B, explore other factors that may impact the results and conduct further testing to refine your strategies.

Best Practices for A/B Testing

When it comes to A/B testing, following best practices is crucial to ensure accurate results and actionable insights. Here are some common best practices to keep in mind:

Consistent Testing Variables

- Ensure that only one variable is changed at a time in each A/B test to accurately determine the impact of that specific change.

- Keep other elements of the test consistent to isolate the impact of the variable being tested.

Sufficient Sample Size

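- Make sure the sample size is large enough for the test to reliably detect the smallest difference you care about. A large sample does not guarantee a significant result, but a sample that is too small can hide a real effect or exaggerate a chance one.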

- Calculate the required sample size before conducting the test to avoid drawing conclusions from insufficient data.
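A rough way to calculate the required sample size is the standard normal-approximation formula for comparing two proportions. The Python sketch below is one such estimate under stated assumptions: the function name is hypothetical, the baseline rate and minimum detectable lift are inputs you have to choose yourself, and the defaults of a 0.05 significance level and 80% power are common conventions rather than requirements.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, min_detectable_lift,
                            alpha=0.05, power=0.8):
    """Approximate visitors needed in EACH group for a two-sided test.

    baseline_rate: expected conversion rate of the control, e.g. 0.04
    min_detectable_lift: smallest relative lift worth detecting, e.g. 0.25
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_detectable_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1 and p1 != p2):
        raise ValueError("rates must be distinct and strictly between 0 and 1")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided threshold
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2

    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical inputs: 4% baseline, aiming to detect a 25% relative lift.
print(sample_size_per_variant(0.04, 0.25))
```

Smaller lifts need far larger samples, because the required size grows with the inverse square of the difference between the two rates.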

Clear Hypotheses

- Define clear hypotheses before running the A/B test to guide the testing process and interpretation of results.

- Ensure that the hypotheses are specific, measurable, and aligned with business goals.

Continuous Monitoring

- Monitor the test results regularly to detect any anomalies or issues that may affect the validity of the results.

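- If a problem such as broken tracking or an uneven traffic split appears, fix it and restart the test rather than adjusting it midway. Changes made during a test, or stopping early because one version looks ahead, undermine the validity of the results.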

Document and Analyze Results

- Document the test setup, variables, and results to facilitate analysis and future reference.

- Analyze the results comprehensively, considering both quantitative and qualitative data to gain a holistic understanding of the outcomes.

Prominent Brand Examples

Many prominent brands have successfully implemented A/B testing strategies to optimize their marketing efforts. For example, Netflix uses A/B testing to refine its recommendation algorithms and personalize user experiences. Amazon conducts A/B tests on its website layout and features to enhance user engagement and conversion rates. Google constantly tests different ad formats and placements to maximize ad performance and revenue.